Avoiding the Kubernetes Cost Trap

How do you ensure your Kubernetes costs are valid? Understand whether you are spending or wasting resources.

Kubernetes has won over the developer community, and because it is open source, it can feel like a wonder served on a silver platter. Now that it has become the de facto standard for container orchestration, it is no surprise that Kubernetes costs keep rising.

Yet here we are, talking about curbing our Kubernetes bills. The key question about these costs is whether we are spending resources or wasting them. If we are expanding our infrastructure, Kubernetes costs will obviously rise. However, while expansion plans justify higher costs, they don’t guarantee that resources are used efficiently.

Moving forward, we will discuss scenarios where we can save some dollars. To begin, let’s assess these four questions:

1. What are the critical KPIs you should be considering?

2. How do we connect the application constructs with our KPIs?

3. How do we structure the costs of our cloud services?

4. What are the areas for optimization?

What Are the Critical KPIs You Should Be Considering?

When we begin building something new, say as a startup, it’s natural to focus on the cost of setting up new infrastructure. As the organization scales, services are added and teams grow, which quickly leads to teams working in silos and reporting on different projects.

Suppose you have built new microservices on Kubernetes, and the engineering team reports how much everything costs, or the Kubernetes cost of the entire setup. In the long run, though, which question do you want to keep tabs on: “How much do you spend on maintaining this cloud service?” or “What are the returns on this particular cloud service?”

Here, we need to focus on aligning the cost of our service(s) with business output.

How Do We Connect the Application Constructs to Our KPIs?

Suppose your organization has aligned on a KPI: cost per product line. To easily separate the costs associated with an application, we need to know which product line it belongs to. For that to happen, teams need to clearly demarcate their Kubernetes infrastructure with proper labels.

If Kubernetes costs are segregated by team, it becomes easier to assign accountability for all the expenses of a Kubernetes deployment. However, as the business scales and the engineering team grows, we often see naming conventions diverge.

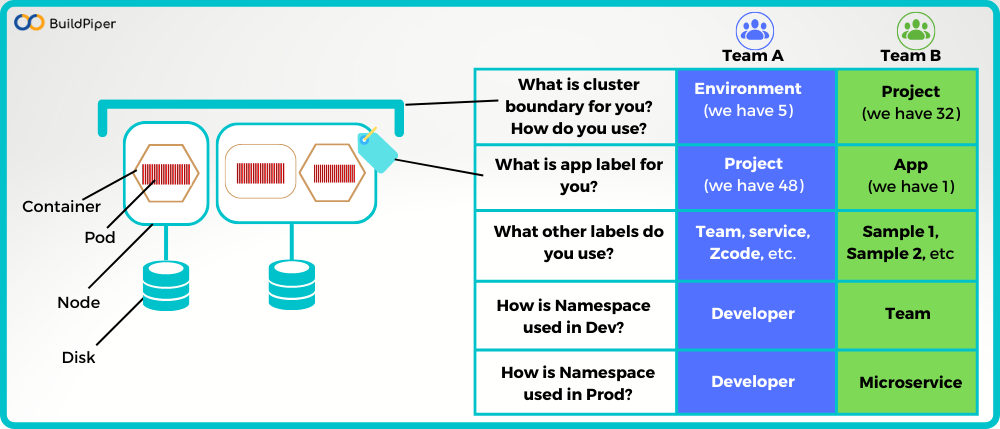

Kubernetes cloud infrastructure cost labels.

In this diagram, we notice:

- The naming conventions differ between teams: each team chooses label names based on its own interpretation and values.

- This makes it difficult to determine the Kubernetes deployment cost for each team.

Standardized naming conventions should be followed when defining labels in the Kubernetes infrastructure to ensure consistency and governance, which in turn helps build accountability.
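As a rough illustration of why consistent labels matter, here is a minimal Python sketch that maps team-specific label names onto one standard and then totals cost per product line. The required keys, the alias table, and the workload figures are all hypothetical; a real setup would read labels from the cluster and costs from a billing export.

```python
from collections import defaultdict

# Hypothetical label standard: every workload should carry these keys.
REQUIRED_LABELS = ("team", "product-line", "environment")

# Hypothetical aliases used by different teams, mapped to the standard key.
LABEL_ALIASES = {
    "Team": "team",
    "owner": "team",
    "product": "product-line",
    "productLine": "product-line",
    "env": "environment",
}


def normalize_labels(labels):
    """Return a copy of `labels` with known aliases renamed and values lowercased."""
    normalized = {}
    for key, value in labels.items():
        normalized[LABEL_ALIASES.get(key, key)] = value.strip().lower()
    return normalized


def missing_labels(labels):
    """List the required keys that are still absent after normalization."""
    normalized = normalize_labels(labels)
    return [key for key in REQUIRED_LABELS if key not in normalized]


def cost_by_label(workloads, label):
    """Sum monthly cost per value of `label`; unlabeled spend lands in 'unallocated'."""
    totals = defaultdict(float)
    for workload in workloads:
        labels = normalize_labels(workload["labels"])
        totals[labels.get(label, "unallocated")] += workload["monthly_cost"]
    return dict(totals)


# Made-up workloads with inconsistent label names.
workloads = [
    {"labels": {"Team": "Payments", "product": "checkout", "env": "prod"}, "monthly_cost": 120.0},
    {"labels": {"owner": "payments", "productLine": "Checkout"}, "monthly_cost": 80.0},
    {"labels": {"app": "legacy-batch"}, "monthly_cost": 50.0},
]

print(cost_by_label(workloads, "product-line"))
print(missing_labels(workloads[1]["labels"]))
```

Without the alias table, the first two workloads would be reported as separate product lines, and the third would silently drop out of any per-team report.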

How Do We Structure the Costs of Cloud Services?

When optimizing the cost of deployment with Kubernetes, the best way to keep an eye on costs is through resource requests. Developers control the amount of CPU and memory reserved per container by setting an estimate in the requests field of the configuration file. With requests in place, there are two ways to attribute costs:

1. By the amount provisioned

2. By the amount used

| | By Provisioned Resources | By the Actual Usage |
|---|---|---|
| Advantages | | |
| Disadvantages | | |

A point to note is that there can be common services used by everyone – how do we distribute those costs?
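To make the two approaches concrete, the sketch below splits a monthly cluster bill between two teams, first by requested CPU and then by average CPU used, and finally spreads a shared-services cost in proportion to each team's usage-based share. All figures and team names are hypothetical, and proportional splitting is only one possible policy for shared costs.

```python
def allocate(total_cost, weights):
    """Split `total_cost` across the keys of `weights` in proportion to their values."""
    total_weight = sum(weights.values())
    return {name: total_cost * weight / total_weight for name, weight in weights.items()}


# Hypothetical month: the cluster costs $1,000 in total.
cluster_cost = 1000.0
requested_cpu = {"team-a": 6.0, "team-b": 4.0}  # CPU cores requested (provisioned)
used_cpu = {"team-a": 2.0, "team-b": 3.0}       # average CPU cores actually used

by_provisioned = allocate(cluster_cost, requested_cpu)
by_usage = allocate(cluster_cost, used_cpu)

# Hypothetical shared services (for example ingress or monitoring),
# split in proportion to each team's usage-based cost.
shared_cost = 200.0
shared_split = allocate(shared_cost, by_usage)

print("by provisioned:", by_provisioned)
print("by usage:      ", by_usage)
print("shared split:  ", shared_split)
```

In this made-up case, team-a carries most of the bill under the provisioned model because it requested more than it used, while the usage model shifts the larger share to team-b and spreads the cost of idle capacity across both teams.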

What Are the Areas for Kubernetes Cost Optimization?

- Pod rightsizing

- Node rightsizing

- Autoscaling (cluster autoscaling, horizontal pod autoscaling)

- Taking advantage of cloud discounts (reserved instances, spot, savings plans)

Pod Rightsizing

According to the Kubernetes documentation, when we declare resource requests and limits for a container, Kubernetes reserves the requested amount as a guaranteed minimum and allows consumption up to the limit value.

To reduce cost:

- When setting resource requests and limits, make sure they provide enough resources for optimal performance while avoiding waste.

- To do this, examine your pod usage and application performance, and adjust requests and limits accordingly.

- Kubernetes offers a tool called the Vertical Pod Autoscaler (VPA), which automatically allocates more or less CPU and memory to existing pods.
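One simple way to turn observed usage into a request is to take a high percentile of the samples and add some headroom. The Python sketch below does exactly that; it is an illustrative heuristic with hypothetical samples and parameters, not the algorithm the VPA uses.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers (pct between 0 and 100)."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def suggest_request(samples, pct=90, headroom=0.15):
    """Suggest a request: the chosen percentile of observed usage plus headroom."""
    return percentile(samples, pct) * (1 + headroom)


# Hypothetical CPU usage samples (millicores) for one container,
# and the request it is currently configured with.
cpu_usage_m = [120, 135, 150, 140, 160, 155, 145, 300, 130, 150]
current_request_m = 1000

suggested_m = suggest_request(cpu_usage_m)
print(f"current request: {current_request_m}m, suggested request: {suggested_m:.0f}m")
```

The percentile and headroom are judgment calls: a latency-sensitive service may warrant a higher percentile, while a batch job can usually tolerate a tighter request.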

Node Rightsizing

Suppose we have a node with 10 CPUs and 10 GB of RAM that costs around $100 per month, and a workload in which each pod needs 4 CPUs and 4 GB of RAM. The node can hold at most 2 such pods, but we have 3. The remaining 2 CPUs and 2 GB are not enough for the third pod, so we have to add a new node for it while the leftover capacity on the first node sits idle. This wastes resources and drives up our costs. A more suitable node size in this scenario would be 8 CPUs and 8 GB of RAM, which holds two pods with nothing left over.
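The arithmetic in this scenario can be checked with a short Python sketch. It compares the 10 CPU / 10 GB node with the 8 CPU / 8 GB alternative for three pods of 4 CPUs and 4 GB each. As a simplifying assumption, it ignores the capacity that a real node reserves for the operating system and the kubelet.

```python
import math


def pods_per_node(node_cpu, node_mem_gb, pod_cpu, pod_mem_gb):
    """Number of pods that fit on one node, limited by the scarcer resource."""
    return min(node_cpu // pod_cpu, node_mem_gb // pod_mem_gb)


def summarize(node_cpu, node_mem_gb, pod_cpu, pod_mem_gb, pod_count):
    """Return (pods per node, nodes needed, fraction of total CPU actually requested)."""
    per_node = pods_per_node(node_cpu, node_mem_gb, pod_cpu, pod_mem_gb)
    nodes = math.ceil(pod_count / per_node)
    cpu_utilization = pod_count * pod_cpu / (nodes * node_cpu)
    return per_node, nodes, cpu_utilization


# Figures from the scenario: three pods of 4 CPUs / 4 GB each.
for node_cpu, node_mem_gb in [(10, 10), (8, 8)]:
    per_node, nodes, utilization = summarize(node_cpu, node_mem_gb, 4, 4, pod_count=3)
    print(f"{node_cpu} CPU / {node_mem_gb} GB node: {per_node} pods per node, "
          f"{nodes} nodes needed, {utilization:.0%} of CPU requested")
```

Both node sizes need two nodes for three pods, but the smaller node leaves less paid-for capacity idle, which is the point of rightsizing.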

There can be another hurdle: performance. The more pods you pack onto a node, the more they compete for its resources, which can make operations slower and less reliable.

Key takeaway –

Just as with pod rightsizing, we need to pay attention to node rightsizing. If CPU and memory are not distributed well across nodes, overall application performance may suffer. Pay attention to what the workload requires for good performance and cut what’s excess.

Managed services such as Google Kubernetes Engine (GKE) and Azure Kubernetes Service (AKS) impose limits on the number of pods per node. The maximum for GKE is 256 pods per node; for AKS, the default limit is 30 pods per node, though it can be raised to 250.

Autoscaling

If the traffic your services receive is predictable, with only rare fluctuations, it makes sense to set resource requirements and track them. However, for something like an eCommerce website, where season-end sales make traffic patterns unpredictable, or an OTT platform with periodic new launches, it’s not easy to put fixed limits on CPU and memory usage. This is where we have:

1. The Horizontal Pod Autoscaler (HPA): dynamically increases or decreases the number of pods based on the observed utilization of CPU and memory.

2. The Cluster Autoscaler: automatically increases or decreases the size of the Kubernetes cluster, adding nodes when pods cannot be scheduled for lack of resources and removing nodes that stay underutilized.
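The core of the HPA's scaling decision is the formula given in the Kubernetes documentation: desired replicas = ceil(current replicas × current metric / target metric). The Python sketch below applies that formula with a tolerance band and min/max bounds. It is a simplification that leaves out details such as stabilization windows and pods that are not yet ready, and the default values for the bounds are hypothetical.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Replica count suggested by the basic HPA formula, clamped to the given bounds."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Example: 4 replicas with a target of 60% average CPU utilization.
print(desired_replicas(4, current_metric=90, target_metric=60))  # load above target: scale out
print(desired_replicas(4, current_metric=30, target_metric=60))  # load below target: scale in
print(desired_replicas(4, current_metric=63, target_metric=60))  # within tolerance: no change
```

Because utilization is measured against the requests set on each container, the HPA only behaves sensibly when pod requests are themselves reasonably sized.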

Taking Advantage of Cloud Discounts

Major public cloud providers offer multiple pricing options. They apply to containerized infrastructure (Kubernetes) just as much as to non-containerized infrastructure.

- On-demand Instances: A pay-as-you-go model where you pay per hour, minute, or second for the instances you launch.

- Reserved Instances: When you know your requirements, you can commit to them in advance. A long-term commitment, typically one or three years, can save approximately 40%.

- Spot Instances: These are unused instances in the cloud provider’s data centers. Their prices are far lower than those of on-demand and reserved instances, but they are volatile.

These go by different names: AWS calls them “spot instances,” GCP calls them “preemptible VMs” (or, more recently, “Spot VMs”), and Azure calls them “spot VMs.” Whatever the name, the purpose is the same: users can request them at a lower price, and the provider can reclaim them when on-demand and reserved-instance customers need the capacity.
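To see how a mix of pricing models affects the bill, here is a small Python sketch that compares ten on-demand nodes with a blend of on-demand, reserved, and spot nodes. The hourly price and the spot discount are hypothetical placeholders; the 40% reserved discount is the approximate figure mentioned above. Actual rates vary by provider, region, and instance type.

```python
# Hypothetical on-demand price per node-hour.
ON_DEMAND_HOURLY = 0.10

# Discount relative to on-demand. The reserved figure follows the ~40% mentioned
# in the text; the spot figure is an assumption for illustration only.
DISCOUNTS = {"on_demand": 0.0, "reserved": 0.40, "spot": 0.70}

HOURS_PER_MONTH = 730


def monthly_cost(node_counts):
    """Monthly cost for a mapping of pricing model -> number of nodes."""
    total = 0.0
    for model, count in node_counts.items():
        total += count * ON_DEMAND_HOURLY * (1 - DISCOUNTS[model]) * HOURS_PER_MONTH
    return total


all_on_demand = monthly_cost({"on_demand": 10})
mixed = monthly_cost({"on_demand": 2, "reserved": 5, "spot": 3})
savings = 1 - mixed / all_on_demand

print(f"all on-demand: ${all_on_demand:.2f}")
print(f"mixed fleet:   ${mixed:.2f}")
print(f"savings:       {savings:.0%}")
```

A mix like this only works if the workloads placed on spot nodes can tolerate interruption, so the split between models is as much a reliability decision as a cost one.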

Conclusion

Containers have truly revolutionized the way we develop and deploy applications. Their portability plays a vital role in enabling scalability and independent development.

Organizations, therefore, want to build container management tools in-house to handle cluster management and optimize the cost of Kubernetes deployments all by themselves. Yet, somewhere down the line, they struggle to justify the time and resources invested in Kubernetes management against their business goals.

Even to come up with great solutions for Kubernetes cost optimization, we need complete visibility into our containerized environment. Today the market is flooded with managed Kubernetes cloud services and enterprise-grade managed Kubernetes platforms. With these services in place, organizations are more willing to hand over the keys to their k8s clusters and direct their focus to their core KPIs.

