Mastering Distributed Caching on AWS: Strategies, Services, and Best Practices

Distributed caching on AWS enhances app performance and scalability. AWS provides ElastiCache (Redis, Memcached) and DAX for implementation.

Distributed caching is a method for storing and managing data across multiple servers, ensuring high availability, fault tolerance, and improved read/write performance. In cloud environments like AWS (Amazon Web Services), distributed caching is pivotal in enhancing application performance by reducing database load, decreasing latency, and providing scalable data storage solutions.

Understanding Distributed Caching

Why Distributed Caching?

As applications demand faster data processing, a traditional single-node cache can become a bottleneck. Distributed caching overcomes this limitation by partitioning data across multiple servers, which allows reads and writes to proceed in parallel and removes the single point of failure inherent in a centralized cache.

Key Components

In a distributed cache, data is stored in a cluster of servers, and each server holds a subset of the cached data. The system hashes each key to decide which server stores a given item, so any client can locate and retrieve that item without consulting a central directory.
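The key-to-server mapping described above can be sketched with a small consistent-hash ring. This is only an illustration: the `HashRing` class, the node names, and the choice of MD5 with 100 virtual nodes are assumptions made for the example, not how ElastiCache or DAX work internally (Redis cluster mode, for instance, uses hash slots rather than a ring).

```python
import bisect
import hashlib


def _hash(value: str) -> int:
    """Map a string to a stable integer (MD5 is used for spread, not security)."""
    return int(hashlib.md5(value.encode("utf-8")).hexdigest(), 16)


class HashRing:
    """Toy consistent-hash ring that maps cache keys to node names."""

    def __init__(self, nodes, vnodes=100):
        self._vnodes = vnodes
        self._ring = []  # sorted list of (hash, node) points
        for node in nodes:
            self.add_node(node)

    def add_node(self, node):
        # Each node gets several points on the ring to even out the key spread.
        for i in range(self._vnodes):
            bisect.insort(self._ring, (_hash(f"{node}#{i}"), node))

    def remove_node(self, node):
        self._ring = [point for point in self._ring if point[1] != node]

    def node_for(self, key):
        if not self._ring:
            raise ValueError("ring has no nodes")
        # Pick the first point at or after the key's hash, wrapping around.
        idx = bisect.bisect_left(self._ring, (_hash(key), ""))
        return self._ring[idx % len(self._ring)][1]
```

With a ring like this, removing a node only remaps the keys that node owned; keys on the surviving nodes stay where they were, which is why this family of schemes is popular for caches that grow and shrink.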

AWS Solutions for Distributed Caching

Amazon ElastiCache

Amazon ElastiCache is a popular choice for implementing distributed caching on AWS. It supports key-value data stores and offers two engines: Redis and Memcached.

Redis

ElastiCache for Redis is a fully managed Redis service that supports data partitioning across multiple Redis nodes, a feature known as sharding. This service is well-suited for use cases requiring complex data types, data persistence, and replication.

Memcached

ElastiCache for Memcached is a high-performance, distributed memory object caching system. It is designed for simplicity and scalability and focuses on caching small chunks of arbitrary data from database calls, API calls, or page rendering.

DAX: DynamoDB Accelerator

DAX is a fully managed, highly available, in-memory cache for DynamoDB. It delivers up to a 10x read performance improvement, taking response times from milliseconds to microseconds, even at millions of requests per second. DAX handles the heavy lifting of adding in-memory acceleration to your DynamoDB tables, so developers do not have to manage cache invalidation, data population, or cluster management themselves.

Implementing Caching Strategies

Write-Through Cache

In this strategy, every write to the database is also applied to the cache as part of the same operation. The advantage is that cached data stays in step with the database, so reads are both fast and current. The trade-off is slower writes, because each write must update two stores before it completes.
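A minimal sketch of the write path, using plain dictionaries as stand-ins for the cache and the database. The `WriteThroughStore` class and its method names are hypothetical, and a real implementation would also need to decide what happens when one of the two writes fails.

```python
class WriteThroughStore:
    """Write-through sketch: every write goes to the database and the cache."""

    def __init__(self, cache, database):
        self.cache = cache        # stand-in for a cache client
        self.database = database  # stand-in for a database client

    def write(self, key, value):
        # Persist first, so a failed database write never leaves a cache-only value.
        self.database[key] = value
        self.cache[key] = value

    def read(self, key):
        # Written keys are served from the cache; anything else falls back to the database.
        if key in self.cache:
            return self.cache[key]
        return self.database.get(key)
```

Writing to the database before the cache is a design choice in this sketch: if the first step raises, the cache is left untouched rather than holding a value the database never saw.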

Lazy-Loading (Write-Around Cache)

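With lazy loading, data is written to the cache only when a client requests it; writes go straight to the database and bypass the cache. This approach keeps the cache limited to data that is actually read, potentially saving memory. However, the first request for any item is a cache miss that must wait on the database, and a cached copy can become stale if the underlying record changes later.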

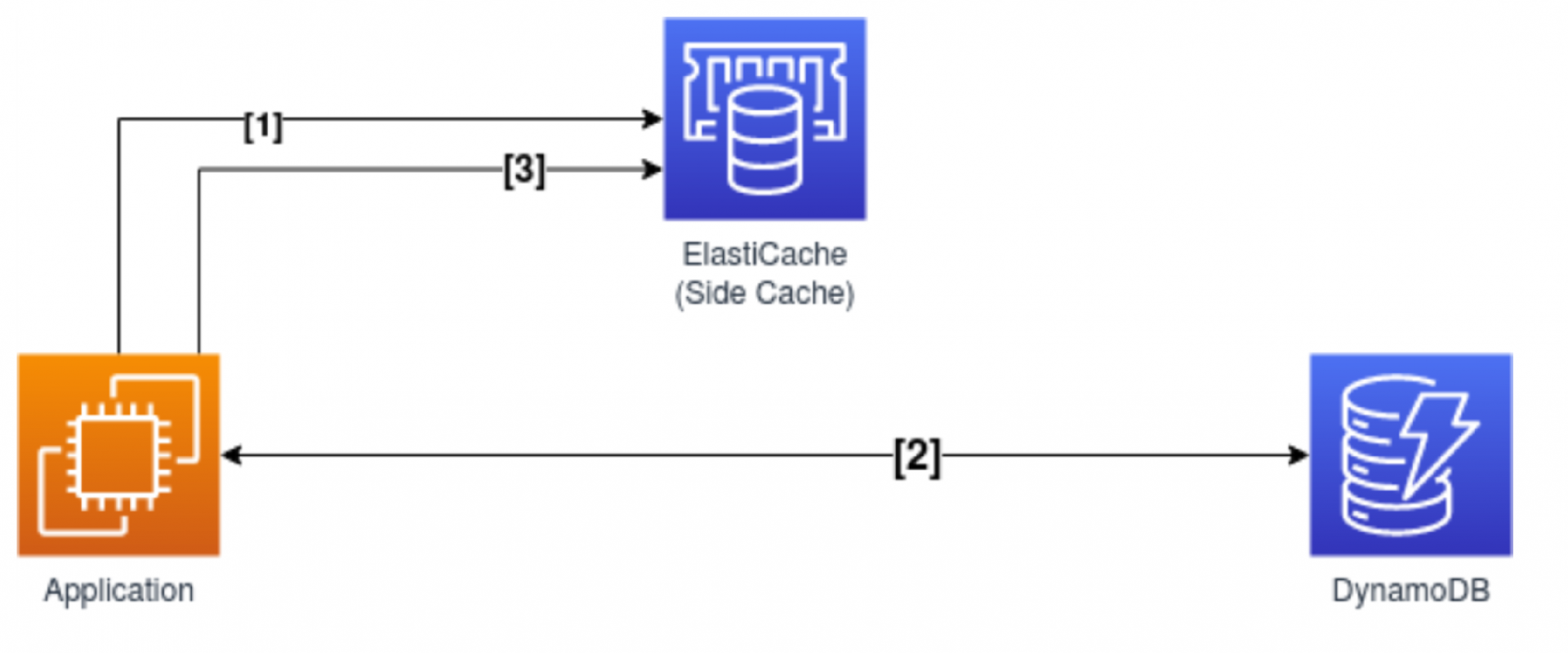

Cache-Aside

In the cache-aside strategy, the application is responsible for reading from and writing to the cache. The application first attempts to read data from the cache. If the data is not found (a cache miss), it's retrieved from the database and stored in the cache for future requests.
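The read path just described might look like the following sketch. `CacheAsideReader` and `load_from_db` are hypothetical names, the cache is a plain dictionary standing in for a real cache client, and the hit and miss counters are added only to make the behavior visible.

```python
class CacheAsideReader:
    """Cache-aside sketch: the application checks the cache, then the database."""

    def __init__(self, cache, load_from_db):
        self.cache = cache                # stand-in for a cache client
        self.load_from_db = load_from_db  # callable that fetches a key from the database
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self.cache:
            self.hits += 1
            return self.cache[key]
        # Cache miss: read from the database and populate the cache for next time.
        self.misses += 1
        value = self.load_from_db(key)
        if value is not None:
            self.cache[key] = value
        return value
```

This sketch does not cache missing records, so repeated lookups for a key that does not exist will reach the database every time; whether to cache such negative results is a separate design decision.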

TTL (Time-To-Live) Eviction

TTL eviction is crucial for managing the lifecycle of data in caches. When each item is assigned a TTL value, the cache automatically evicts it once the TTL expires. This ensures that data does not occupy memory indefinitely, helps keep the cache size under control, and puts an upper bound on how long a stale entry can be served.
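To make the mechanism concrete, here is a small in-process sketch. The `TTLCache` class is hypothetical and expires entries lazily when they are read; managed caches apply their own expiry machinery, so treat this as an illustration of the idea rather than of any service's behavior. The injectable `clock` parameter is an assumption added so that expiry can be exercised without waiting.

```python
import time


class TTLCache:
    """In-process TTL sketch: entries expire a fixed number of seconds after being set."""

    def __init__(self, default_ttl, clock=time.monotonic):
        self.default_ttl = default_ttl
        self._clock = clock
        self._items = {}  # key -> (value, expires_at)

    def set(self, key, value, ttl=None):
        ttl = self.default_ttl if ttl is None else ttl
        self._items[key] = (value, self._clock() + ttl)

    def get(self, key, default=None):
        entry = self._items.get(key)
        if entry is None:
            return default
        value, expires_at = entry
        if self._clock() >= expires_at:
            # Expired: drop the entry so it stops occupying memory.
            del self._items[key]
            return default
        return value
```

Short TTLs suit data that changes often, while longer TTLs favor hit rate for data that rarely changes.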

Monitoring and Optimization

Monitoring With Amazon CloudWatch

Amazon CloudWatch provides monitoring for AWS cloud resources. With CloudWatch, you can collect and track metrics, collect and monitor log files, and set alarms. For distributed caching, CloudWatch lets you monitor metrics such as cache hits and misses, memory usage, and CPU utilization.
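Hit and miss counts are usually most useful when combined into a hit rate. The helper below is a sketch of that arithmetic; the function names and the 0.8 threshold are assumptions chosen for illustration, and the inputs are whatever hit and miss totals you have collected for a given period (for example, the CacheHits and CacheMisses metrics that ElastiCache for Redis publishes).

```python
def cache_hit_rate(hits: int, misses: int) -> float:
    """Return the fraction of lookups served from the cache (0.0 when there is no traffic)."""
    total = hits + misses
    if total == 0:
        return 0.0
    return hits / total


def below_threshold(hits: int, misses: int, threshold: float = 0.8) -> bool:
    """Flag a period whose hit rate falls under an illustrative threshold."""
    return cache_hit_rate(hits, misses) < threshold
```

A persistently low hit rate often points to TTLs that are too short, a cache that is too small for the working set, or keys that are rarely reused.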

Optimization Techniques

To maximize the efficiency of a distributed cache, consider data partitioning strategies, load balancing, read replicas for scaling read operations, and implementing failover mechanisms for high availability. Regular performance testing is also critical to identify bottlenecks and optimize resource allocation.

FAQs

How Do I Choose Between ElastiCache Redis and ElastiCache Memcached?

Your choice depends on your application's needs. Redis is ideal if you require support for rich data types, data persistence, and complex operational capabilities, including transactions and pub/sub messaging systems. It's also beneficial for scenarios where automatic failover from a primary node to a read replica is crucial for high availability. On the other hand, Memcached is suited for scenarios where you need a simple caching model and horizontal scaling. It's designed for simplicity and high-speed caching for large-scale web applications.

What Happens if a Node Fails in My Distributed Cache on AWS?

For ElastiCache Redis, AWS provides a failover mechanism when the cluster has replicas and automatic failover is enabled: if a primary node fails, a replica is promoted to be the new primary, minimizing downtime. For Memcached, however, the data on the failed node is lost, and there is no automatic failover. DAX is resilient because the service handles failover automatically in the background, redirecting requests to a healthy node in a different Availability Zone if necessary.

How Can I Secure My Cache Data in Transit and at Rest on AWS?

AWS supports in-transit encryption via SSL/TLS, ensuring secure data transfer between your application and the cache. For data at rest, ElastiCache for Redis encrypts data written to disk during sync, backup, and swap operations, as well as backups stored in Amazon S3; data held in memory is not covered by at-rest encryption. DAX also offers at-rest encryption. Additionally, both services integrate with AWS Identity and Access Management (IAM), allowing detailed access control to your cache resources.

How Do I Handle Cache Warming in a Distributed Caching Environment?

Cache warming strategies depend on your application's behavior. After a deployment or node restart, you can preload the cache with high-usage keys, ensuring hot data is immediately available. Automating cache warming processes through AWS Lambda functions triggered by specific events is another efficient approach. Alternatively, a gradual warm-up during the application's standard operations is simpler but may lead to initial performance dips due to cache misses.
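A preload step of the kind described above can be sketched as a small function. The names `warm_cache`, `hot_keys`, and `load_from_db` are hypothetical, the cache is a dictionary standing in for a real cache client, and how you obtain the list of hot keys (access logs, analytics, a fixed list) is left open.

```python
def warm_cache(cache, hot_keys, load_from_db):
    """Preload the given keys into the cache and return how many were loaded."""
    loaded = 0
    for key in hot_keys:
        if key in cache:
            continue  # already warm, avoid a needless database read
        value = load_from_db(key)
        if value is not None:
            cache[key] = value
            loaded += 1
    return loaded
```

The same function could be called from a deployment hook or from an event-driven job such as a Lambda function, as long as it can reach both the cache and the database.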

Can I Use Distributed Caching for Real-Time Data Processing?

Yes, both ElastiCache Redis and DAX are suitable for real-time data processing. ElastiCache Redis supports real-time messaging and allows the use of data structures and Lua scripting for transactional logic, making it ideal for real-time applications. DAX provides microsecond latency performance, which is crucial for workloads requiring real-time data access, such as gaming services, financial systems, or online transaction processing (OLTP) systems. However, the architecture must ensure data consistency and efficient read-write load management for optimal real-time performance.

Conclusion

Implementing distributed caching on AWS can significantly improve your application's performance, scalability, and availability. By leveraging AWS's robust infrastructure and services like ElastiCache and DAX, businesses can meet their performance requirements and focus on building and improving their applications without worrying about the underlying caching mechanisms. Remember, the choice of the caching strategy and tool depends on your specific use case, data consistency requirements, and your application's read-write patterns. Continuous monitoring and optimization are key to maintaining a high-performing distributed caching environment.
