Microservices Deployment Patterns

Deployment strategies for Microservices.

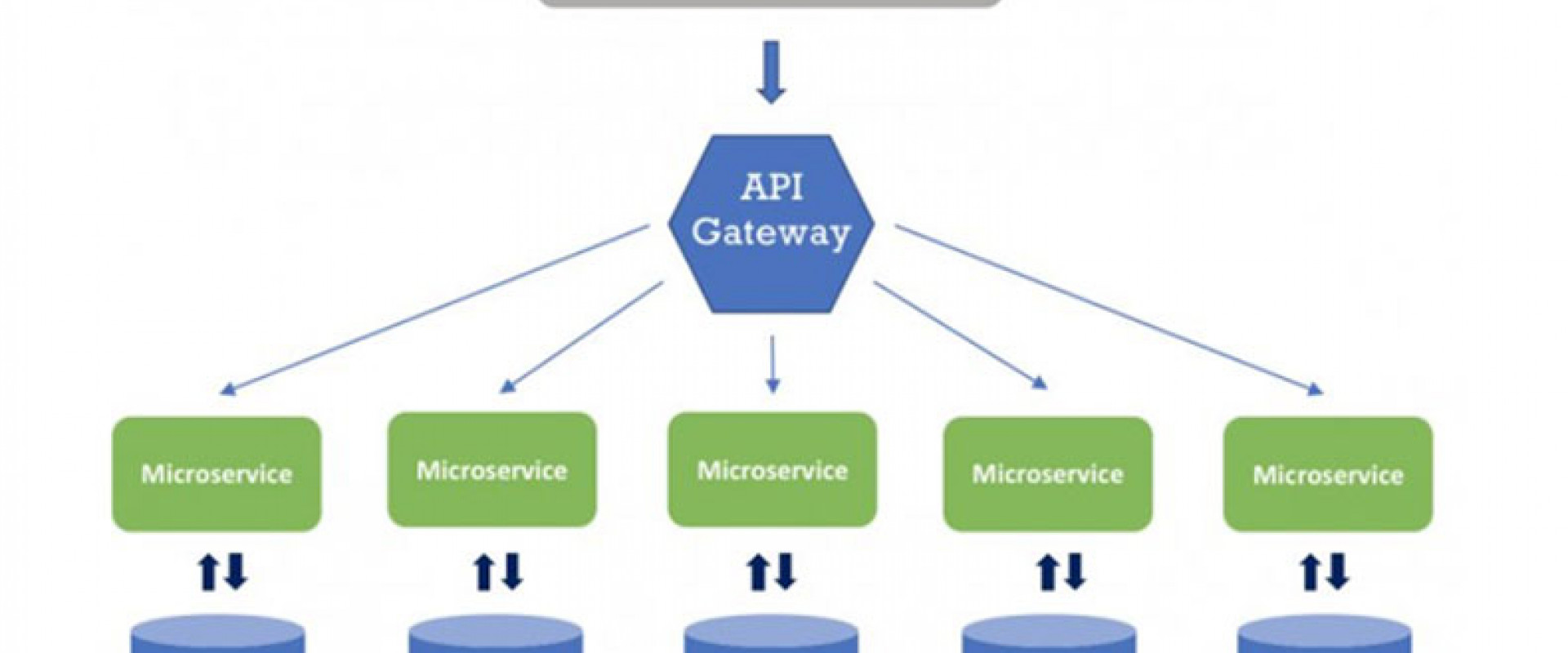

Microservices are everywhere right now. Businesses are moving toward cloud-native architectures and breaking large applications into smaller, independent modules called microservices. This architecture offers more flexibility, maintainability, and operability, which in turn tends to improve customer satisfaction.

These advantages bring new challenges for architects and operations engineers. Where they once managed a single application, they now manage many. Each service may also need its own supporting services, such as databases, LDAP servers, and message queues. Stakeholders therefore need a deployment strategy that deploys the whole application reliably while preserving its integrity and delivering good performance.

Deployment Patterns

Microservices architects have proposed several patterns for deploying microservices. Each pattern addresses a different mix of functional and non-functional requirements.

Any deployment pattern has to account for the following requirements. Microservices may be written in a variety of programming languages or frameworks, or in different versions of the same language or framework. Each microservice comprises several different service instances, like the UI, DB, and backend. Each microservice must be independently deployable and scalable. Service instances must be isolated from each other. It must be possible to build and deploy a service quickly. Each service must be allocated appropriate computing resources. The deployment environment must be reliable, and the service must be monitored.

Multiple Service Instances per Host

To meet the requirements listed above, one option is to deploy instances of multiple services on a single host, which may be physical or virtual. Many service instances from different services then run on a shared host.

There are different ways to do this. Each instance can run as its own JVM process. Multiple instances can also run inside the same JVM process, much like web applications sharing one server. Start-up and shutdown can be automated with scripts driven by a configuration that holds deployment-related information, such as version numbers.

With this approach, the host's resources are used very efficiently, because many instances share them.
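As a rough sketch of the script-driven approach described above, the following Python snippet turns a small configuration into one start-up command per service instance on a shared host. The service names, JAR paths, versions, and ports are all hypothetical, and `--server.port` is only a placeholder for whatever argument a given service actually reads.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceConfig:
    """Deployment details for one service instance (all values hypothetical)."""
    service: str
    version: str
    port: int


def build_start_command(cfg: InstanceConfig) -> list[str]:
    """Return the argv that would start one instance as its own JVM process."""
    jar = f"/opt/services/{cfg.service}-{cfg.version}.jar"  # assumed layout
    return ["java", "-jar", jar, f"--server.port={cfg.port}"]


def plan_host(instances: list[InstanceConfig]) -> list[list[str]]:
    """Build start commands for every instance, rejecting port clashes."""
    ports = [cfg.port for cfg in instances]
    if len(ports) != len(set(ports)):
        raise ValueError("two instances on the same host cannot share a port")
    return [build_start_command(cfg) for cfg in instances]


# Two instances of one service and one instance of another, on one host.
HOST_INSTANCES = [
    InstanceConfig("orders", "1.4.0", 8081),
    InstanceConfig("orders", "1.4.0", 8082),
    InstanceConfig("billing", "2.0.1", 8091),
]

if __name__ == "__main__":
    for argv in plan_host(HOST_INSTANCES):
        # A real script would hand argv to subprocess.Popen; here we only print.
        print(" ".join(argv))
```

The port check hints at the cost of sharing a host: instances compete for ports, memory, and CPU, so the script has to coordinate them.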

Service Instance per Host

In many cases, microservices need their own space and a clearly separated deployment environment, and cannot share it with other services or service instances. Sharing may lead to resource conflicts or scarcity. There can also be problems when services written in different versions of the same language or framework cannot be co-located.

In such cases, a service instance could be deployed on its own host. The host could either be a physical or virtual machine.

With this pattern, there are no conflicts with other services. The service remains entirely isolated, all the resources of the host are available to it, and it is easy to monitor.

The main drawback of this deployment pattern is that it consumes more resources, since each instance occupies an entire host.
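To make the resource trade-off concrete, here is a small sketch that counts the hosts needed when every instance gets a dedicated host versus when instances are packed onto shared hosts. The memory figures and host capacity are invented for illustration, and first-fit packing is just one simple placement strategy chosen for this example, not something the patterns prescribe.

```python
def hosts_for_dedicated(demands: list[int]) -> int:
    """One host per service instance, however small the instance is."""
    return len(demands)


def hosts_for_shared(demands: list[int], host_capacity: int) -> int:
    """First-fit packing of instances onto shared hosts of equal capacity."""
    free: list[int] = []  # remaining capacity of each host opened so far
    for demand in demands:
        if demand > host_capacity:
            raise ValueError("instance does not fit on a single host")
        for i, remaining in enumerate(free):
            if demand <= remaining:
                free[i] = remaining - demand
                break
        else:
            # No existing host had room, so open a new one.
            free.append(host_capacity - demand)
    return len(free)


# Hypothetical memory demand (in MB) of five service instances.
DEMANDS_MB = [512, 256, 1024, 256, 512]

if __name__ == "__main__":
    print("dedicated hosts:", hosts_for_dedicated(DEMANDS_MB))
    print("shared hosts:", hosts_for_shared(DEMANDS_MB, host_capacity=2048))
```

With these numbers the dedicated layout needs five hosts while the shared layout needs two. That difference is the price paid for full isolation.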

Service Instance per VM

In many cases, microservices need their own self-contained deployment environment. The microservice must be robust, must start and stop quickly, and must scale up and down quickly. It cannot share resources with other services or risk conflicts with them. It also needs more resources, and those resources must be properly allocated to it.

In such cases, the service could be built as a VM image and deployed in a VM.

Scaling is straightforward, because new VMs can be started from the same image on demand, although a VM generally takes longer to boot than a container. Each VM has its own computing resources, allocated according to the needs of the microservice. There is no chance of conflict with any other service. Each VM is properly isolated and can sit behind a load balancer.

Service Instance per Container

In some cases, microservices are very small and consume very few resources, yet they still need to be isolated. They must not share resources, and they cannot be co-located with other services at the risk of conflict. They need to be deployed quickly whenever there is a new release, and the same service may need to run in several release versions at once. They must also scale rapidly and start and shut down very quickly.

In such a case, the service could be built as a container image and deployed as a container.

The service then remains isolated, and there is no chance of conflict. Computing resources can be allocated according to the calculated needs of the service. The service can be scaled rapidly, and containers can be started and shut down quickly.
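As an illustration of per-container resource allocation, the sketch below builds `docker run` commands that cap memory and CPU and run two release versions of the same service side by side. The image name, tags, container names, and limits are hypothetical. The `--detach`, `--name`, `--memory`, and `--cpus` options are standard `docker run` flags.

```python
def docker_run_command(image: str, tag: str, name: str,
                       memory: str, cpus: str) -> list[str]:
    """Return the argv for starting one isolated, resource-capped container."""
    return [
        "docker", "run", "--detach",
        "--name", name,        # unique container name on this host
        "--memory", memory,    # hard memory limit, e.g. "256m"
        "--cpus", cpus,        # CPU limit, e.g. "0.5"
        f"{image}:{tag}",
    ]


# Two release versions of the same (hypothetical) service image.
COMMANDS = [
    docker_run_command("example/orders", "1.4.0", "orders-v1-4-0", "256m", "0.5"),
    docker_run_command("example/orders", "1.5.0", "orders-v1-5-0", "256m", "0.5"),
]

if __name__ == "__main__":
    for argv in COMMANDS:
        # A real script would pass argv to subprocess.run; here we only print.
        print(" ".join(argv))
```

Because each version lives in its own image and container, the two releases do not share libraries or runtime state, even on the same host.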

Serverless Deployment

In certain cases, the microservice does not need to know anything about the underlying deployment infrastructure. In these situations, deployment is outsourced to a third-party vendor, typically a cloud service provider. The business is indifferent to the underlying resources; all it wants is to run the microservice on a platform. It pays the provider based on the resources consumed by each service call. The provider takes the code and executes it for each request. The execution may happen in any sandbox, such as a container or a VM; this is hidden from the service itself.

The service provider takes care of provisioning, scaling, load balancing, patching, and securing the underlying infrastructure. Popular examples of serverless offerings include AWS Lambda and Google Cloud Functions.

The infrastructure of a serverless deployment platform is very elastic. The platform scales the service to absorb the load automatically. The time spent managing the low-level infrastructure is eliminated. The expenses are also lowered as the microservices provider pays only for the resources consumed for each call.
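To show how little infrastructure code a serverless service carries, here is a minimal function in the style of an AWS Lambda Python handler. The `handler(event, context)` signature follows the Lambda convention. The shape of `event` and the response fields are assumptions made for this example, since they depend on how the function is invoked.

```python
import json


def handler(event, context):
    """Handle one request; the platform decides where and when this runs."""
    # "name" is a hypothetical field of the incoming event.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}"}),
    }


if __name__ == "__main__":
    # Local invocation for illustration; the platform normally supplies both
    # arguments, so a None context is acceptable only in this local call.
    print(handler({"name": "Ada"}, None))
```

Nothing in the function refers to hosts, ports, or scaling. Those concerns belong to the provider, which is the core of this pattern.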

Service Deployment Platform

Microservices can also be deployed on application deployment platforms. Such platforms abstract away the deployment by providing high-level services. The service abstraction can handle requirements such as availability, load balancing, monitoring, and observability for the service instances. The platform is heavily automated, which makes application deployment reliable, fast, and efficient.

Examples of such platforms are Docker Swarm, Kubernetes, and Cloud Foundry, which is a PaaS offering.
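As one example of the high-level abstraction such a platform offers, the sketch below generates a Kubernetes Deployment manifest as JSON. The service name, image, replica count, and resource limits are hypothetical. The field names follow the `apps/v1` Deployment schema, and Kubernetes accepts manifests in JSON as well as YAML.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Describe the desired state; the platform works to keep it running."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # number of service instances to keep alive
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "resources": {
                                "limits": {"cpu": "500m", "memory": "256Mi"}
                            },
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = deployment_manifest("orders", "example/orders:1.4.0", replicas=3)
    print(json.dumps(manifest, indent=2))
```

The manifest only declares what should run and how many copies. Scheduling the instances onto hosts and replacing failed ones is left to the platform.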

Conclusion

Microservices deployment options and offerings are constantly evolving, and more deployment patterns are likely to emerge. Many of the patterns described above are very popular, widely used by microservice providers, and have proven successful and reliable. As paradigms change, however, administrators continue to look for innovative solutions.

ZippyOPS provides consulting, implementation, and management services for DevOps, DevSecOps, Cloud, Automated Ops, Microservices, Infrastructure, and Security.

Services offered by us: https://www.zippyops.com/services

Our Products: https://www.zippyops.com/products

Our Solutions: https://www.zippyops.com/solutions

For demos and videos, check out our YouTube playlist: https://www.youtube.com/watch?v=4FYvPooN_Tg&list=PLCJ3JpanNyCfXlHahZhYgJH9-rV6ouPro
