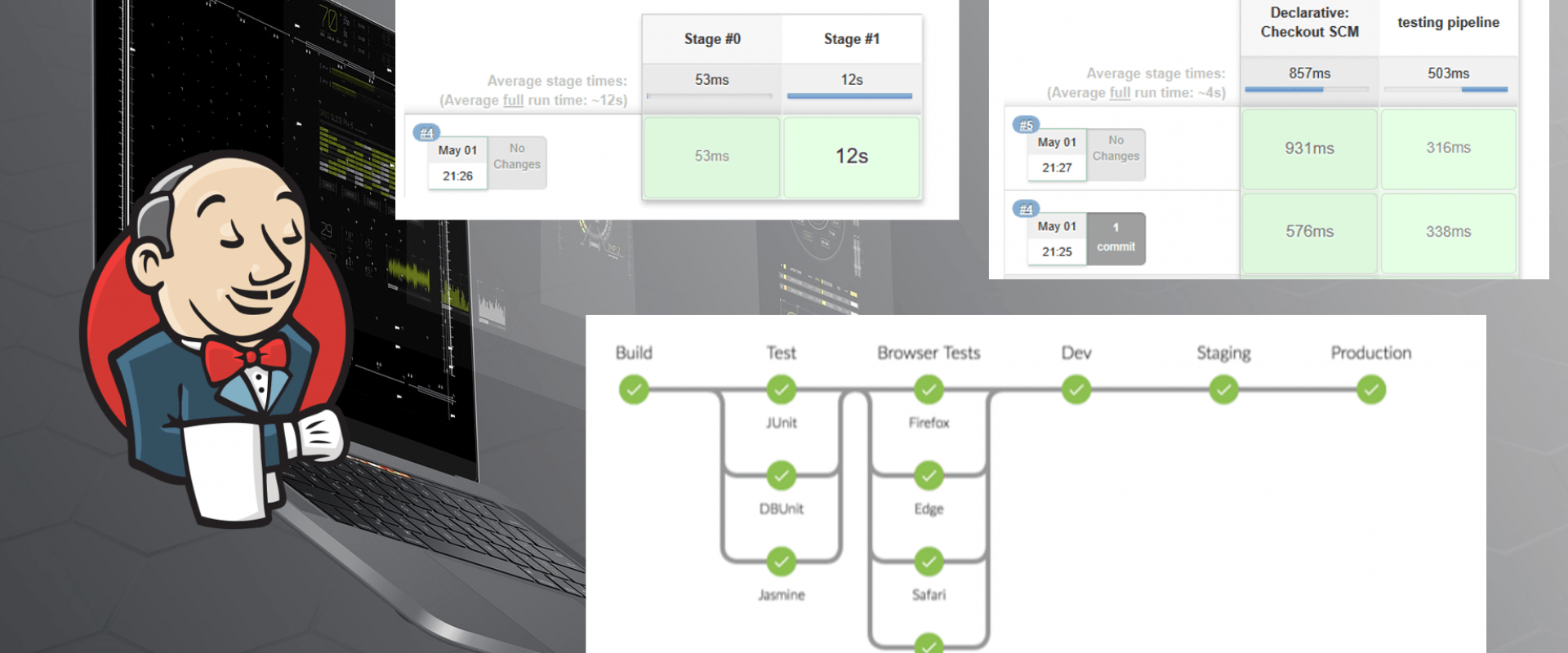

Parallel jobs in a pipeline

Normally a declarative Jenkins pipeline is pretty simple. It can look something like this:

```groovy
pipeline {
    agent {
        label "linux&&x86_64"
    }
    stages {
        stage("Building / Testing") {
            // Nested sequential stages must be wrapped in their own `stages` block
            stages {
                stage("Testing") {
                    steps {
                        sh("make test")
                    }
                }
                stage("Building") {
                    steps {
                        sh("make clean && make")
                    }
                }
            }
        }
    }
}
```

By using the `parallel` block, the same stages can run on several architectures at once:

```groovy
pipeline {
    agent none
    stages {
        stage("Building / Testing") {
            // `parallel` has to live inside a stage, not directly under `stages`
            parallel {
                stage("x86_64 Building / Testing") {
                    agent { label "x86_64&&linux" }
                    stages {
                        stage("Testing") {
                            steps {
                                sh("make test")
                            }
                        }
                        stage("Building") {
                            steps {
                                sh("make clean && make")
                            }
                        }
                    }
                }
                stage("s390x Building / Testing") {
                    agent { label "s390x&&linux" }
                    stages {
                        stage("Testing") {
                            steps {
                                sh("make test")
                            }
                        }
                        stage("Building") {
                            steps {
                                sh("make clean && make")
                            }
                        }
                    }
                }
                stage("ppc64le Building / Testing") {
                    agent { label "ppc64le&&linux" }
                    stages {
                        stage("Testing") {
                            steps {
                                sh("make test")
                            }
                        }
                        stage("Building") {
                            steps {
                                sh("make clean && make")
                            }
                        }
                    }
                }
            }
        }
    }
}
```

Just exec out to script and generate them like in scripted pipelines

Another approach is to just exec out to a `script` block and generate the parallel stages there, which would look something like this:

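```groovy
def arches = ['x86_64', 's390x', 'ppc64le', 'armv8', 'armv7', 'i386']

// Map of "branch name -> closure", the shape the `parallel` step expects.
// Use a named parameter (`arch`): inside the inner closure, `it` would refer
// to that closure's own argument instead of the architecture.
archStages = arches.collectEntries { arch ->
    ["${arch} Building / Testing".toString(), {
        node("${arch}&&linux") {
            stage("Testing") {
                checkout scm
                sh("make test")
            }
            stage("Building") {
                sh("make clean && make")
            }
        }
    }]
}

pipeline {
    agent none
    stages {
        stage("Building / Testing") {
            steps {
                // `script` is only allowed inside `steps`
                script {
                    parallel(archStages)
                }
            }
        }
    }
}
```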
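To see what the `collectEntries` call actually hands to `parallel`, the map-building logic can be run outside Jenkins with plain Groovy. This is a sketch only: `node`, `stage`, `sh` and `parallel` below are hypothetical stubs that just record what would run, and the architecture list is trimmed to two entries. Note that the outer closure names its parameter `arch` instead of relying on `it`: inside the inner branch closure, `it` refers to that closure's own argument, which is null when the branch is called without arguments, so the label would come out as `null&&linux`.

```groovy
// Hypothetical stand-ins for the Jenkins `node`, `stage`, `sh` and `parallel`
// steps: they only record what would run, so this executes with plain `groovy`.
def log = []
def node = { label, body -> log << "node(${label})".toString(); body() }
def stage = { name, body -> log << "stage(${name})".toString(); body() }
def sh = { cmd -> log << "sh(${cmd})".toString() }
// The real `parallel` runs branches concurrently; this stub runs them in order.
def parallel = { Map branches -> branches.each { name, body -> body() } }

def arches = ['x86_64', 's390x']

// Build the "branch name -> closure" map. The outer parameter is named `arch`
// so the inner (branch) closure still sees the architecture when it runs later.
def archStages = arches.collectEntries { arch ->
    ["${arch} Building / Testing".toString(), {
        node("${arch}&&linux") {
            stage("Testing") { sh("make test") }
            stage("Building") { sh("make clean && make") }
        }
    }]
}

parallel(archStages)
println archStages.keySet()
log.each { println it }
```

Running it prints the two branch names, then the recorded `node`, `stage` and `sh` calls for each architecture in order.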

The main issue with this approach is that it's not fully declarative, which basically defeats the purpose of using a declarative pipeline in the first place. It also forces `archStages` to be defined outside the `pipeline` block, far above the stage that uses it. If there were several stages before this one, you'd be hard-pressed to figure out where `archStages` actually comes from.
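If you do go this route anyway, one way to at least keep the map next to the stage that uses it is to build it inside the `script` block itself. A sketch, reusing the architecture list and `make` targets from the snippet above and assuming the same closure behaviour; it is still a scripted island inside the declarative pipeline, so the first objection stands.

```groovy
pipeline {
    agent none
    stages {
        stage("Building / Testing") {
            steps {
                script {
                    // Defined right where it is consumed, so nothing has to be
                    // looked up outside the `pipeline` block.
                    def arches = ['x86_64', 's390x', 'ppc64le', 'armv8', 'armv7', 'i386']
                    def archStages = arches.collectEntries { arch ->
                        ["${arch} Building / Testing".toString(), {
                            node("${arch}&&linux") {
                                stage("Testing") {
                                    checkout scm
                                    sh("make test")
                                }
                                stage("Building") {
                                    sh("make clean && make")
                                }
                            }
                        }]
                    }
                    parallel(archStages)
                }
            }
        }
    }
}
```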
