Tips for Managing Multi-Cluster Kubernetes Deployment with High Efficiencies

If managing connectivity and fault isolation are among your key challenges in running multi-cluster Kubernetes, here are a few experience-backed tips for you.

Kubernetes is emerging as the go-to solution for managing complex app development environments. According to a study conducted by Statista, the market share for Kubernetes technology in 2021 was as high as 5.49%. The study also predicts that by 2024, the revenue figure for such container management services and software would be as high as $944 million.

By helping development professionals manage containerized applications with high efficiency and runtime environment specificities, this technology is paving the way for a hassle-free mechanism for running complex, multi-container environments and parallel development workflows on the same hardware.

Let’s dig deeper and understand how complex app development and deployment environments can be managed better by using the multi-cluster Kubernetes technology.

What Is Multi-Cluster Kubernetes?

Essentially, Kubernetes is deployed on basic infrastructure that has some RAM and CPU available to create and run containers with applications. Let’s say that this infrastructure is your laptop (termed a node). A Kubernetes setup running on this node would then use a container runtime, such as Docker, and manage the containers running on it.

One level up, consider using two nodes instead of just one to create a greater RAM and CPU capacity for running Kubernetes. This resource-pooling to create a Kubernetes system is termed a Kubernetes Cluster.

Essentially, a collection of pooled IT resources that runs a full-fledged Kubernetes system is termed a cluster, and many such clusters interacting and working together are called multi-cluster Kubernetes. There are quite a few advantages that come with using multi-cluster Kubernetes; let’s see what they are.
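To make the node, cluster, and multi-cluster terms concrete, here is a minimal Python sketch of resource pooling. The `Node` class, the node names, and the capacity figures are all made up for illustration; this is a toy model, not how Kubernetes itself tracks capacity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """One machine contributing capacity (names and sizes are made up)."""
    name: str
    cpu_cores: int
    ram_gib: int


def cluster_capacity(nodes):
    """Sum the CPU and RAM of every node pooled into one cluster."""
    return {
        "cpu_cores": sum(n.cpu_cores for n in nodes),
        "ram_gib": sum(n.ram_gib for n in nodes),
    }


# Two hypothetical nodes pooled into a single cluster.
cluster_a = [Node("laptop-1", 4, 16), Node("laptop-2", 8, 32)]

# A multi-cluster setup is several such clusters side by side.
multi_cluster = {
    "cluster-a": cluster_a,
    "cluster-b": [Node("server-1", 16, 64)],
}

capacities = {name: cluster_capacity(nodes) for name, nodes in multi_cluster.items()}
print(capacities)
```

Each cluster keeps its own pool, which is the property the later sections on isolation and compliance rely on.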

Why Use Kubernetes Multi-Cluster?

Though it does get a little more complicated to use multi-cluster systems in many cases, there are quite a few reasons they help save time and improve user experience with an application. The four key reasons to use multi-cluster Kubernetes are as follows.

Improved Scalability

Kubernetes helps scale resources up or down based on the popularity of an application hosted on its clusters. Because these applications can be made available on any node, whether on physical hardware or in the cloud, they are readily accessible to users, and loads can vary widely as a result. Multi-cluster Kubernetes manages this scale and availability quite well by spinning up idle resources (replicating container pods when the load is high, or recreating dead pods) and by scaling down resource usage when loads are low.

Additionally, it helps boost performance and reduce latency in loading.
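The scale-up and scale-down behavior described above can be sketched with the proportional rule that the Kubernetes Horizontal Pod Autoscaler documentation describes: desired replicas = ceil(current replicas × current metric / target metric). The function below is a simplified illustration only; the `min_replicas` and `max_replicas` defaults are assumptions, and the real autoscaler also applies tolerances and stabilization windows that are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Scale the replica count in proportion to load, within fixed bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 pods averaging 90% CPU against a 60% target: scale up.
print(desired_replicas(4, 90, 60))

# 4 pods averaging 15% CPU against a 60% target: scale down.
print(desired_replicas(4, 15, 60))
```

The clamp to a minimum and maximum keeps a traffic spike or a quiet period from pushing the replica count to an extreme.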

Application Isolation

In a multi-cluster environment, it is possible to isolate workflows pertaining to different activities/stages of development. Dedicating separate Kubernetes clusters for specific purposes helps in diagnostics in case of errors or failure, as reconfiguration or troubleshooting remains restricted only to the concerned or problematic cluster, while the others keep running as-is.

For example, having a dedicated Kubernetes cluster for staging and prototyping can help isolate tweaks to an application by confining all the fixes to just one cluster; a separate cluster for experimentation and innovation helps preserve source code. Additionally, in case of hardware failure or repair, the entirety of operations doesn’t need to halt.

Regulatory Compliance

In situations where cross-border user data is concerned, data privacy and protection rules make it challenging for organizations to stay compliant. While a single cluster would struggle to account for the origin or destination of a piece of user data and the regulations that apply to it, a multi-cluster environment can manage that far more easily.

Dedicating Kubernetes clusters to the geographies they serve is one way that organizations can ensure compliance with the laws prevailing in each region. It also helps in swiftly updating the protocol when amendments are announced or changes are made to the relevant regulation.
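As a rough illustration of geography-dedicated clusters, the sketch below routes a user to a cluster based on region. The region codes, cluster names, and the `cluster_for_user` helper are hypothetical; a real setup would usually make this decision in a gateway or DNS layer rather than in application code.

```python
# Hypothetical mapping from a user's region to the cluster allowed to hold
# that user's data. Region codes and cluster names are illustrative only.
REGION_TO_CLUSTER = {
    "eu": "cluster-eu-west",
    "us": "cluster-us-east",
    "in": "cluster-ap-south",
}


def cluster_for_user(region):
    """Return the cluster that must serve a user from the given region.

    Unknown regions raise instead of falling back to a default, so data is
    never placed in a geography nobody has reviewed.
    """
    try:
        return REGION_TO_CLUSTER[region.lower()]
    except KeyError:
        raise LookupError(
            f"no compliant cluster configured for region {region!r}"
        ) from None


print(cluster_for_user("EU"))
```

Failing loudly on an unmapped region is a deliberate choice here: a silent default cluster would defeat the purpose of separating data by jurisdiction.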

Immense Flexibility

Multi-cluster Kubernetes deployment adds flexibility of several kinds to the whole ecosystem of application development and use. For instance:

· Resource allocation becomes easier and more efficient when multiple clusters are involved, as more resources can be spun up when required or powered down when not.

· The flexibility of transferring applications across clusters helps reduce downtime if one cluster fails, since the other clusters can step in and cover the deficit.

· Many users today rely on multi-cloud services for various applications, as vendor lock-in has fallen out of favor. If better deals or offers are available on a different cloud, multi-cluster setups allow easy application transfer.

What Is Multi-Cluster Application Architecture?

A multi-cluster Kubernetes environment greatly eases the process of application development. It helps to understand the two types of multi-cluster application architecture that can be used in such an environment; each gives the application developer a different set of flexibilities and conveniences. Let’s see what they are.

Replicated Application Architecture

The simplest way to deploy a multi-cluster Kubernetes environment is to create an exact replica of an application on each of the clusters in the Kubernetes system. This way, each cluster runs a clone of the same app, which provides several benefits, including:

· More effective load balancing.

· Reduced downtime, because if a cluster fails, its load can be redistributed to the other functional clusters.

To run this architecture, good infrastructure and stable network configuration are the basic necessities. Automated load balancers also make the process more efficient, making the user experience smooth and seamless.
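The failover benefit of the replicated architecture can be sketched as a round-robin balancer that skips clusters marked as down. The `ClusterBalancer` class and the cluster names are hypothetical, and health is set by hand here; a production load balancer would rely on real health checks.

```python
from itertools import cycle


class ClusterBalancer:
    """Round-robin over replicated clusters, skipping unhealthy ones."""

    def __init__(self, clusters):
        self._clusters = list(clusters)
        self._healthy = set(self._clusters)
        self._ring = cycle(self._clusters)

    def mark_down(self, cluster):
        self._healthy.discard(cluster)

    def mark_up(self, cluster):
        if cluster in self._clusters:
            self._healthy.add(cluster)

    def next_cluster(self):
        """Return the next healthy cluster, or raise if none is left."""
        for _ in range(len(self._clusters)):
            candidate = next(self._ring)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy cluster available")


balancer = ClusterBalancer(["cluster-a", "cluster-b", "cluster-c"])
balancer.mark_down("cluster-b")  # simulate a cluster failure
print([balancer.next_cluster() for _ in range(4)])
```

Because every cluster runs the same clone of the app, any healthy cluster can take the next request, which is what keeps this logic so short.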

Service-Based Application Architecture

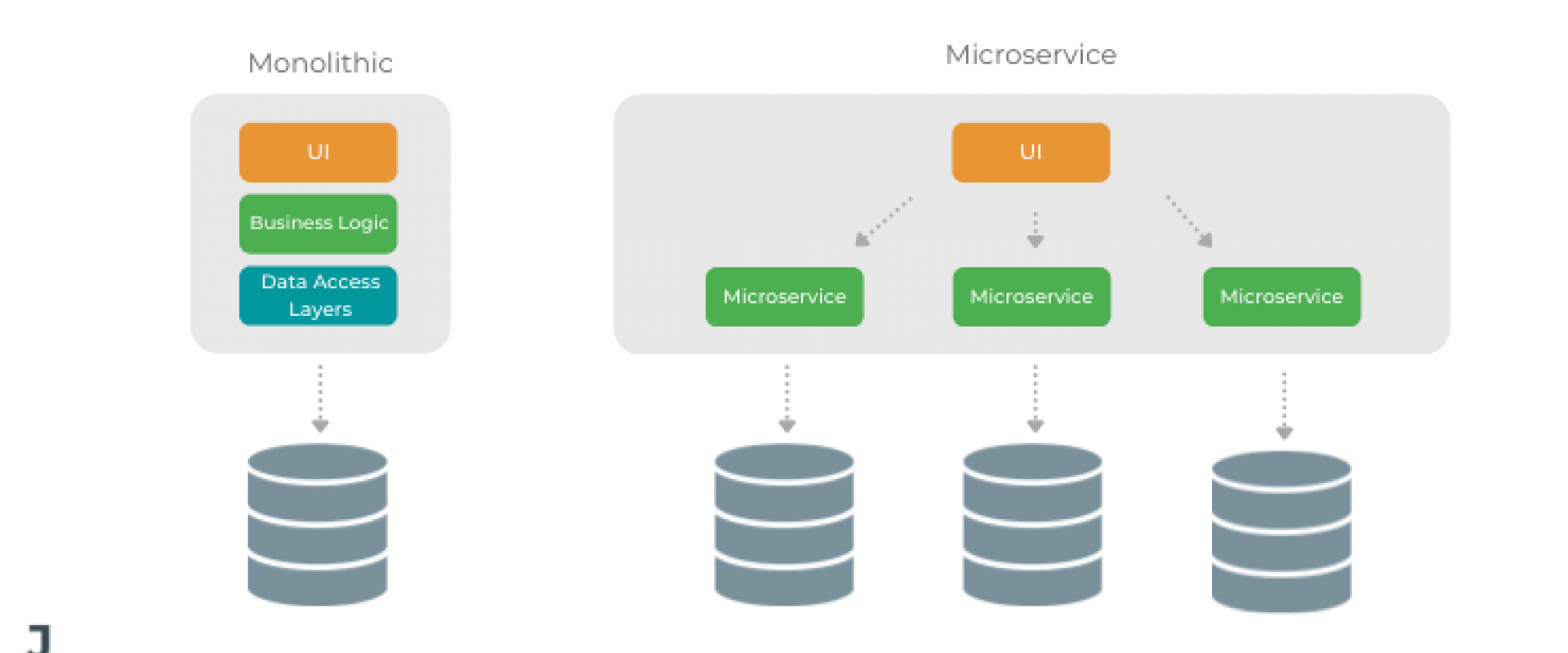

This is the multi-cluster application architecture wherein an application is deconstructed into individual components or services, which are then housed in different clusters. It does increase the complexity of the application and demands an efficient and clear communication layer between the components of the application across clusters.

However, it does make geographical regulatory compliance easier to manage. It can also improve application performance and strengthen the security of the application as a whole.

Service-based application architecture does escalate operational costs.
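To picture the communication layer that a service-based layout needs, consider this small sketch. The service names, the placement table, and the gateway hostnames are all invented for illustration; in practice, this lookup would come from service discovery or a service mesh rather than hard-coded dictionaries.

```python
# Hypothetical placement of an application's services across clusters.
SERVICE_PLACEMENT = {
    "frontend": "cluster-a",
    "orders": "cluster-b",
    "payments": "cluster-c",
}

# Hypothetical gateway address per cluster; real setups would get these from
# service discovery or a service mesh rather than a hard-coded table.
CLUSTER_GATEWAYS = {
    "cluster-a": "gw.cluster-a.example.internal",
    "cluster-b": "gw.cluster-b.example.internal",
    "cluster-c": "gw.cluster-c.example.internal",
}


def resolve(service, caller_cluster):
    """Return (address, crosses_cluster_boundary) for a call to a service."""
    target_cluster = SERVICE_PLACEMENT[service]
    if target_cluster == caller_cluster:
        return f"{service}.local", False
    return f"{service}.{CLUSTER_GATEWAYS[target_cluster]}", True


# The frontend in cluster-a calling the payments service in cluster-c.
print(resolve("payments", "cluster-a"))
```

Every call that crosses a cluster boundary depends on the network between clusters, which is where the added complexity and cost of this architecture come from.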

Challenges in Multi-Cluster Kubernetes Environment

For all the advantages and savings that multi-cluster Kubernetes brings to the table, certain challenges need to be overcome first, as they can hinder the full realization of these advantages, especially in the case of service-based application architecture.

Here are the key challenges with this technology, insofar as service-based application architecture is concerned.

Managing Connectivity

Service-based architecture essentially breaks the app up into modules and scatters them across clusters. To have the same setup for, say, a different geography, the entire set of clusters needs to be replicated. If connectivity between these clusters is poor, managing the application in this manner can turn into a nightmare.

Deployment and Re-Deployment

With service-based application architecture, which is the more popular choice with Kubernetes, deployment and re-deployment can become a hassle when modifications or updates need to be made. While the architecture itself permits changing only the specific modules that need it, replicating those changes across all clusters can be time-consuming.
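One common way to keep multi-cluster re-deployment manageable is to roll a change out one cluster at a time and stop at the first failure. The sketch below assumes a caller-supplied `deploy` function; `fake_deploy` and the cluster names are stand-ins for illustration, not a real deployment API.

```python
def roll_out(version, clusters, deploy):
    """Deploy `version` to clusters one at a time, stopping at the first failure.

    `deploy(cluster, version)` is a caller-supplied function that returns True
    on success. Returns (updated, failed), where `failed` is None when every
    cluster was updated.
    """
    updated = []
    for cluster in clusters:
        if not deploy(cluster, version):
            return updated, cluster
        updated.append(cluster)
    return updated, None


def fake_deploy(cluster, version):
    # Stand-in for a real deployment call; pretends cluster-b is unreachable.
    return cluster != "cluster-b"


print(roll_out("v2", ["cluster-a", "cluster-b", "cluster-c"], fake_deploy))
```

Stopping early means a bad update reaches only some clusters, at the cost of a slower rollout than updating everything in parallel.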

Load Balancing and Fault Recovery

Replication-based architecture makes it easier to manage server loads by redistributing incoming traffic amongst the functional app clones, whereas it poses a challenge with service-based application architecture. Third-party load balancing tools do a great job at navigating this challenge with relative ease; however, the innate nature of service-based architecture makes things more complicated.

Policy Management

Policies regulate the changes that can occur in the Kubernetes environment. With service-based architecture, component-specific policies need to be applied to specific clusters; when an update is underway, it can take longer than necessary to apply it to components scattered across multiple clusters.
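A simple way to keep track of scattered policies is to compare what each cluster should have against what it actually has. The sketch below is a plain set comparison; the policy names and cluster names are hypothetical, and collecting the applied policies from real clusters is outside its scope.

```python
def policy_drift(desired, applied):
    """Return {cluster: sorted list of policies still missing on it}.

    `desired` maps each cluster to the set of policy names it should have;
    `applied` maps each cluster to the set it currently has.
    """
    drift = {}
    for cluster, wanted in desired.items():
        missing = wanted - applied.get(cluster, set())
        if missing:
            drift[cluster] = sorted(missing)
    return drift


# Hypothetical policy names for two clusters.
desired = {
    "cluster-eu": {"deny-privileged", "eu-data-residency"},
    "cluster-us": {"deny-privileged"},
}
applied = {
    "cluster-eu": {"deny-privileged"},
}

print(policy_drift(desired, applied))
```

Running a check like this after each policy update shows which clusters are still waiting for the change.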

Conclusion

Kubernetes makes it possible to deploy large-scale applications and provides a way to manage them effectively and efficiently with fewer resources. Certain challenges do make it a little harder; however, there are ways to counter them and embrace the benefits of this technology.
