Are All Kubernetes Ingresses the Same?

The simple answer is yes and no, but the real answer is more complicated. There has been a lot written on this topic, and I am taking a shot at making this area more understandable.

Before getting started, it's important to point out a key fact: upstream k8s does not provide an Ingress. As with components such as service load balancers and storage, it provides only the API that a controller consumes to create the functionality described in the k8s resource. An Ingress consists of a controller that watches the k8s APIs and a proxy engine that the controller programs to effect forwarding.
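As a rough illustration of the controller half of that pairing, the sketch below flattens an Ingress-shaped resource into the kind of route table a proxy engine might be programmed with. The field names follow the networking.k8s.io/v1 Ingress schema; the `Route` type, the `build_routes` function, and the example host and Service names are hypothetical, and a real controller would receive resources from a watch on the API server rather than from a literal dict.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    host: str      # "" means the rule applies to any host
    path: str
    service: str
    port: int


def build_routes(ingress: dict) -> list:
    """Flatten one Ingress-shaped resource into proxy routes.

    Assumption: backends reference Services by numeric port only.
    """
    routes = []
    for rule in ingress.get("spec", {}).get("rules", []):
        host = rule.get("host", "")
        for p in rule.get("http", {}).get("paths", []):
            svc = p["backend"]["service"]
            routes.append(
                Route(host, p.get("path", "/"), svc["name"], svc["port"]["number"])
            )
    # Longest paths first, so the most specific prefix wins at match time.
    return sorted(routes, key=lambda r: len(r.path), reverse=True)


# Hypothetical resource, shaped like a networking.k8s.io/v1 Ingress.
example_ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "demo"},
    "spec": {
        "rules": [
            {
                "host": "shop.example.com",
                "http": {
                    "paths": [
                        {"path": "/", "pathType": "Prefix",
                         "backend": {"service": {"name": "web", "port": {"number": 80}}}},
                        {"path": "/api", "pathType": "Prefix",
                         "backend": {"service": {"name": "api", "port": {"number": 8080}}}},
                    ]
                },
            }
        ]
    },
}

for route in build_routes(example_ingress):
    print(route)
```

The point of the sketch is only the division of labour: the resource describes intent, and something outside upstream k8s has to turn it into forwarding state.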

The k8s Access Pattern

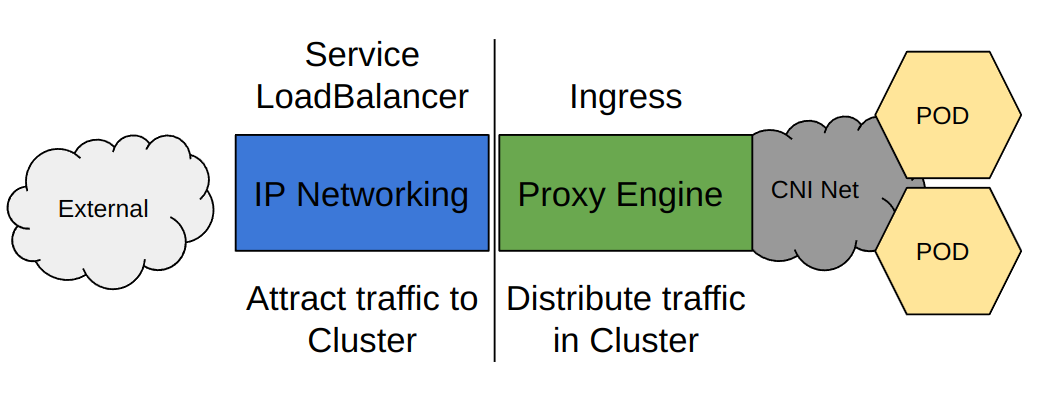

The original access pattern consisted of Service LoadBalancers and Ingresses. The LoadBalancer attracts traffic to the cluster by adding IP addresses, and the Ingress distributes requests to PODs over the CNI network. While they were designed to be used together, they can be used independently.

- Service LoadBalancer-only access. Traffic is attracted to the cluster nodes and sent to PODs either directly or via kube-proxy, depending on configuration. It's best to think of the Service LoadBalancer as an L3/4 function. It uses IP to forward traffic, and when traffic arrives at a node where no targeted POD is present, it depends on kube-proxy to reach the other nodes. Even so, a LoadBalancer-only solution can provide a uniform access solution for a k8s cluster.

- Ingress-only access. The Ingress depends on another mechanism to get traffic to the Ingress proxy POD. The Ingress distributes traffic in the cluster based on its HTTP routing rules. These rules program a proxy engine in the Ingress POD, which, because it runs in the cluster, has direct access to the PODs over the CNI. The Service API has mechanisms other than the LoadBalancer to get traffic to the Ingress, but they are all specific to, or require configuration of, external infrastructure.

Both LoadBalancer and Ingress depend on the Service API: the LoadBalancer is a Service type, and the Ingress uses the Service to define request endpoints.
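To make the Ingress-to-Service dependency concrete, here is a minimal sketch of what an in-cluster proxy does with a request: a path rule selects a Service, and the Service's endpoints supply the POD addresses. The IP addresses, Service names, and the `pick_pod` and `path_matches` helpers are made up for illustration, and round-robin is just one plausible balancing choice, not something the Ingress API mandates.

```python
from itertools import cycle
from typing import Optional

# Hypothetical snapshot of Service -> ready POD IPs, as a proxy might
# learn it by watching the endpoints behind each Service.
ENDPOINTS = {
    "web": ["10.244.1.5", "10.244.2.7"],
    "api": ["10.244.1.9"],
}

# Path prefix -> Service name: the part an Ingress rule contributes.
# Ordered most specific first.
RULES = [("/api", "api"), ("/", "web")]

# One round-robin iterator per Service.
_pickers = {name: cycle(ips) for name, ips in ENDPOINTS.items()}


def path_matches(prefix: str, path: str) -> bool:
    """Prefix match on path-element boundaries: /api matches /api/x, not /apix."""
    if prefix == "/":
        return True
    return path == prefix or path.startswith(prefix.rstrip("/") + "/")


def pick_pod(path: str) -> Optional[str]:
    """Return the POD IP the next request for `path` would be sent to."""
    for prefix, service in RULES:
        if path_matches(prefix, path):
            return next(_pickers[service])
    return None
```

Calling `pick_pod("/api/users")` resolves through the `api` Service, while repeated calls for paths under `/` alternate between the two `web` PODs.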

Cloud Provider Ingresses

To make things more confusing, Cloud Providers did not follow the design pattern. Cloud providers integrate their infrastructure resources with Kubernetes using a Cloud Controller, not via independent LoadBalancer, Ingress or Storage controllers.

The cloud providers already had load balancers, which they called NLBs, operating at L3/4, so these mapped well to the Service LoadBalancer API. In addition, they had a product they called an Application Load Balancer, or ALB. One of the providers decided to map the ALB to the Ingress resource, and the others followed suit. However, unlike other k8s Ingresses, the ALB sits outside the cluster, as it's a reuse of the network tools used for virtualization.

Most importantly, the cloud provider Ingress implementation allocates IP addresses, a task normally undertaken in the Service API, often using the LoadBalancer type. Therefore, in a cloud provider, each is an independent entity. A cloud LoadBalancer is not paired with a cloud Ingress; however, a cloud LoadBalancer can be paired with an in-cluster Ingress.

Are Ingresses API Gateways?

Not really. An API gateway includes functionality that the Ingress API does not support; therefore, strictly speaking, an Ingress is not an API gateway. There are quite a few such functions, but a great example is header matching, often used for A/B testing during development. The Ingress resource does not support this basic function.
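A small sketch may help show what header matching adds. An Ingress rule can select a backend only by host and path; the hypothetical `GatewayRule` below adds an exact-match header condition, which is enough to steer canary traffic. The rule type, the `X-Canary` header, and the backend names are assumptions chosen for illustration and do not correspond to any particular product's configuration model.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class GatewayRule:
    path_prefix: str
    backend: str
    # Exact-match header conditions; empty means "no header condition".
    headers: dict = field(default_factory=dict)


def select_backend(rules, path: str, request_headers: dict) -> Optional[str]:
    """Return the backend of the first rule whose path and headers all match."""
    # HTTP header names are case-insensitive, so normalize before comparing.
    normalized = {k.lower(): v for k, v in request_headers.items()}
    for rule in rules:
        if not path.startswith(rule.path_prefix):
            continue
        if all(normalized.get(k.lower()) == v for k, v in rule.headers.items()):
            return rule.backend
    return None


# Same path, two backends: only the header tells them apart.
rules = [
    GatewayRule("/checkout", "checkout-v2", {"X-Canary": "true"}),
    GatewayRule("/checkout", "checkout-v1"),
]

print(select_backend(rules, "/checkout", {"x-canary": "true"}))
print(select_backend(rules, "/checkout", {}))
```

With path-only rules, the two entries above would be indistinguishable, which is why this case cannot be expressed in the Ingress resource alone.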

Are All Ingresses the Same?

Yes and no… Any k8s Ingress controllers that are configured using the Ingress API are the same, in that they all have the same functionality. They may be implemented with different proxy engines, but the Ingress API defines the available functionality.

The Truth About Ingresses

With the exception of the CNCF NGINX Ingress, which supports only the Ingress API, all Ingresses are different.

Because the Ingress API lacks key functionality, every Ingress has a unique configuration model. You cannot apply a configuration for a Solo Ingress to an Ambassador Ingress; they use a configuration defined in a Custom Resource Definition (CRD) unique to each implementation.

The expanded functionality required to enable Ingress controllers to operate as an API gateway is well understood and uniform across the “Ingresses.” However, each Ingress developer has a different view on how to configure an Ingress, and each attempts to make it simple according to their own definition of simple and their target audience.

Examining Ingress Configuration Differences

There are lots of Ingress controllers, and although I have looked at many of them, there are far more than I could accurately compare. I picked three popular Ingresses (Solo.io’s Gloo Edge, Traefik’s Proxy, and Kong Kubernetes Ingress) and looked at the Custom Resource Definition-based configuration that qualifies each of them as an API gateway. Before I go on: they are all great products, and none of them has got this wrong. They are all just different.

To show the difference, I’ll include a simple configuration that is not possible with the k8s Ingress API: URL rewriting. The URL presented by the Ingress is /pre, and it is remapped to /backend, the target URL in the POD. Each Custom Resource is easily identified by its apiVersion.
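Independent of any one controller's CRD, the rewrite itself can be sketched in a few lines. The `rewrite_path` function below is hypothetical, and carrying sub-paths across (so that /pre/items becomes /backend/items) is an assumption; the text only states that /pre maps to /backend.

```python
from typing import Optional


def rewrite_path(
    path: str,
    public_prefix: str = "/pre",
    backend_prefix: str = "/backend",
) -> Optional[str]:
    """Map a path under the public prefix to the backend prefix.

    Returns None when the request path is not under the public prefix.
    """
    if path == public_prefix:
        return backend_prefix
    # Match on a path-element boundary so /prefix is not treated as /pre.
    if path.startswith(public_prefix + "/"):
        return backend_prefix + path[len(public_prefix):]
    return None


print(rewrite_path("/pre"))
print(rewrite_path("/pre/items/1"))
print(rewrite_path("/prefix"))
```

Every gateway-capable Ingress performs some version of this transformation; what differs between products is how the two prefixes are declared.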

Cloud Providers

The Gateway API also addresses the confusion caused by cloud providers implementing the Ingress with a proxy external to the cluster. The Gateway API supports external gateways, creating per-namespace gateways on demand. There are currently two implementations:

- Google Cloud: GKE supports the Gateway API, using it to program Google's external proxy engines. https://gateway-api.sigs.k8s.io/implementations/#google-kubernetes-engine

- Acnodal EPIC: This is a multi-cloud implementation of API Gateways using the Gateway API. https://www.epick8sgw.io/

You can learn more about the Gateway API at https://gateway-api.sigs.k8s.io/ and find the current implementation status at https://gateway-api.sigs.k8s.io/implementations.
