Automating Data Quality Check in Data Pipelines

Are you looking for ways to automate data quality checks in your data pipelines? Here are some helpful tools that can streamline the process for you.

In a recent survey by Great Expectations, 91% of respondents revealed that data quality issues had some level of impact on their organization. It highlights the critical importance of data quality in data engineering pipelines. Organizations can avoid costly mistakes, make better decisions, and ultimately drive better business outcomes by ensuring that data is accurate, consistent, and reliable.

However, 41% of respondents in the survey also reported that lack of tooling was a major contributing factor to data quality issues. Employing data quality management tools in data pipelines can automate various processes required to ensure that the data remains fit for purpose across analytics, data science, and machine learning use cases. They also assess existing data pipelines, identify quality bottlenecks, and automate various remediation steps.

To help organizations find the best tools, this article lists some popular tools for automating data quality checks in data engineering pipelines.

Importance of Data Quality Check-In Data Engineering Pipelines

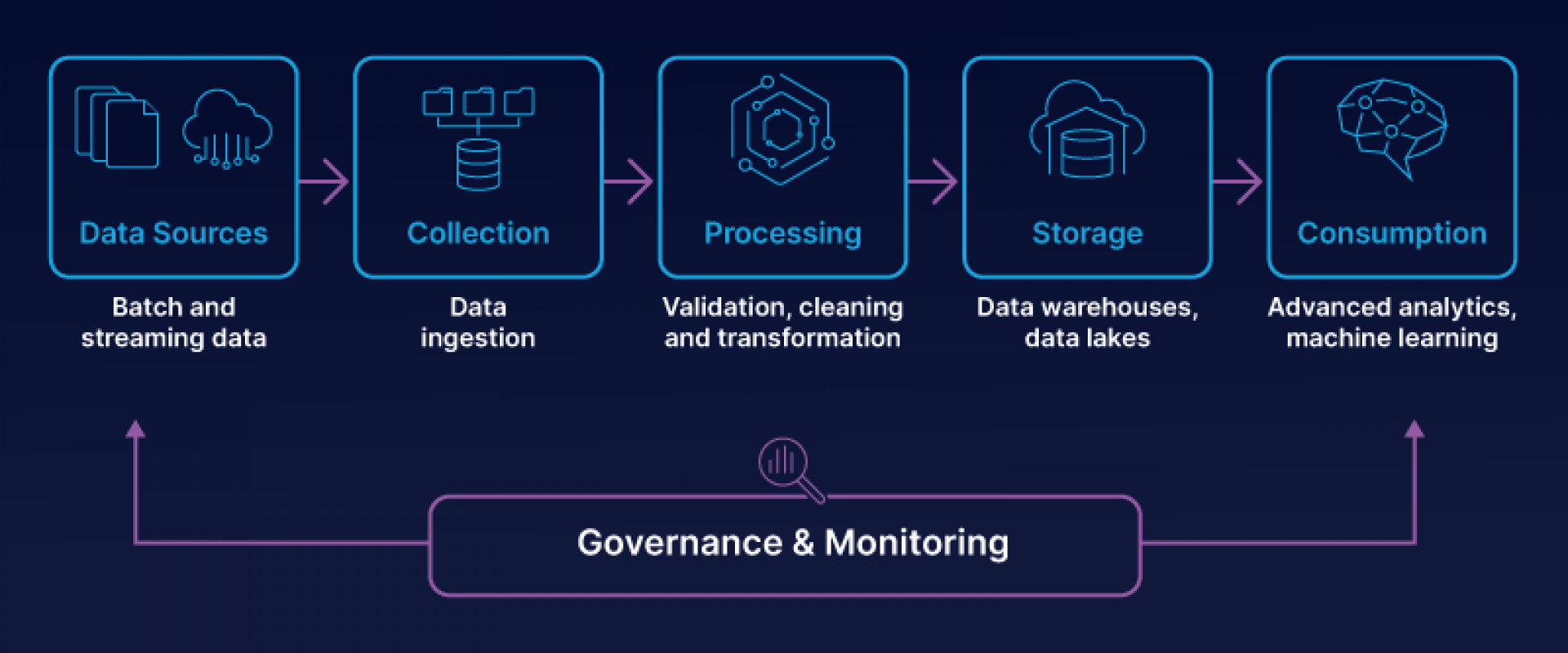

Data quality tools are as essential as other data engineering tools, such as integration, warehousing, processing, storage, governance, and security. Here are several reasons why data quality check is essential in data pipelines:

- Accuracy: It ensures that the data is accurate and error-free. This is crucial for making informed decisions based on the data. If the data is inaccurate, it can lead to incorrect conclusions and poor business decisions.

- Completeness: It ensures that all required data is present in the pipeline and the pipeline is free from duplicate data. Incomplete data can result in missing insights, leading to incorrect or incomplete analysis.

- Consistency: Data quality check ensures consistency across different sources and pipelines. Inconsistent data can lead to discrepancies in the analysis and affect the overall reliability of the data.

- Compliance: It ensures the data complies with regulatory requirements and industry standards. Non-compliance can result in legal and financial consequences.

- Efficiency: Data quality checks help identify and fix data issues early in the pipeline, reducing the time and effort required for downstream processing and analysis.

The data quality checks in the ingestion, storage, ETL, and processing layers are usually similar, regardless of the business needs and differing industries. The goal is to ensure that data is not lost or degraded while moving from source to target systems.

Why Automate?

Here’s how automating data testing and data quality checks can enhance the performance of data engineering pipelines:

- By testing data at every pipeline stage with automation, data engineers can identify and address issues early, preventing errors and data quality issues from being propagated downstream.

- Automation saves time and reduces the manual effort required to validate data. This, in turn, speeds up the development cycle and enables faster time-to-market.

- Automation tools can automate repetitive tasks such as data validation, reducing the time and effort required to perform these tasks manually. It increases the efficiency of the data engineering pipeline and allows data engineers to focus on more complex tasks.

- Data engineers can ensure that their pipelines and storage comply with regulatory and legal requirements and avoid costly penalties by automatically testing for data privacy, security, and compliance issues.

- Detecting errors early through automated checks reduces the risk of data processing errors and data quality issues. This saves time, money, and resources that would otherwise be spent on fixing issues downstream.

List of Top Tools to Automate Data Quality Check

Each data quality management tool has its own set of capabilities and workflows for automation. Most tools include features for data profiling, cleansing, tracking data lineage, and standardizing data. Some may also have parsing and monitoring capabilities or more. Here are some popular tools with their features:

1. Great Expectations

Great Expectations provides a flexible way to define, manage, and automate data quality checks in data engineering pipelines. It supports various data sources, including SQL, Pandas, Spark, and more.

Key features:

- Mechanisms for a shared understanding of data.

- Faster data discovery

- Integrates with your existing stack.

- Essential security and governance.

- Integrates with other data engineering tools such as AWS Glue, Snowflake, BigQuery, etc.

Pricing: Open-source

Popular companies using it: Moody’s Analytics, Calm, CarNext.com

2. IBM InfoSphere Information Server for Data Quality

IBM InfoSphere Information Server for Data Quality offers end-to-end data quality tools for data cleansing, automating source data investigation, data standardization, validation, and more. It also enables you to continuously monitor and analyze data quality to prevent incorrect and inconsistent data.

Key features:

- Designed to be scalable and handle large volumes of data across distributed environments.

- Offers flexible deployment options.

- Helps maintain data lineage.

- Supports various data sources and integration with other IBM data management products.

Pricing: Varied pricing

Popular companies using it: Toyota, Mastercard, UPS

3. Apache Airflow

Apache Airflow is a platform to programmatically author, schedule, and monitor workflows. It provides features like task dependencies, retries, and backfills to automate data engineering pipelines and can be used for performing data quality checks as well.

Key features:

- Modular architecture that can scale to infinity.

- Defined in Python, which allows for dynamic pipeline generation.

- Robust integrations with many third-party services, including AWS, Azure, GCP, and other next-gen technologies.

Pricing: Open-source

Popular companies using it: Airbnb, PayPal, Slack

4. Apache Nifi

Apache Nifi provides a visual interface for designing and automating data engineering pipelines. It has built-in processors for performing data quality checks, such as validating data schema, checking for null values, and ensuring data completeness.

Key features:

- Browser-based UI

- Data provenance

- Extensible architecture

- Supports powerful and scalable directed graphs (DAGs) of data routing, transformation, and system mediation logic.

Pricing: Open-source

Popular companies using it: Adobe, Capital One, The Weather Company

5. Talend

Talend is a comprehensive platform that provides data quality solutions for data profiling, cleansing, enrichment, and standardization across your systems. It supports various data sources, including databases, files, and cloud-based platforms.

Key features:

- Intuitive UI

- ML-powered recommendations to address data quality issues.

- Real-time capabilities

- Automates better data

Pricing: Varied pricing plans

Popular companies using it: Beneva, Air France, Allianz

6. Informatica Data Quality

Informatica Data Quality is an enterprise-level data quality tool with data profiling, cleansing, and validation features. It also provides other capabilities such as data de-duplication, enrichment, and consolidation.

Key features:

- Reliable data quality powered by AI.

- Reusability (of rules and accelerators) to save time and resources.

- Exception management through an automated process.

Pricing: IPU (Informatica Processing Unit) pricing

Popular companies using it: Lowell, L.A. Care, HSB

Conclusion

The above is not a definitive list. There are many other popular tools, such as Precisely Trillium, Ataccama One, SAS Data Quality, etc. Choosing the right data engineering tools for a pipeline involves considering several factors. It involves understanding your data pipeline and quality requirements, evaluating available tools, and their automation capabilities, considering cost and ROI, the ability to integrate with your current stack, and testing the tool with your pipeline.

We Provide consulting, implementation, and management services on DevOps, DevSecOps, DataOps, Cloud, Automated Ops, Microservices, Infrastructure, and Security

Services offered by us: https://www.zippyops.com/services

Our Products: https://www.zippyops.com/products

Our Solutions: https://www.zippyops.com/solutions

For Demo, videos check out YouTube Playlist: https://www.youtube.com/watch?v=4FYvPooN_Tg&list=PLCJ3JpanNyCfXlHahZhYgJH9-rV6ouPro

If this seems interesting, please email us at [email protected] for a call.

Relevant Blogs:

Managing Application Logs and Metrics With Elasticsearch and Kibana

The Effect of Data Storage Strategy on PostgreSQL Performance

Understanding Data Compaction in 3 Minutes

CPU vs. GPU Intensive Applications

Recent Comments

No comments

Leave a Comment

We will be happy to hear what you think about this post